Building a Demo FaaS Platform with Resonate

Showcasing Durable Executions for On-Premise Workloads

When it comes to distributed systems, durability, fault tolerance, and ease of development are often at odds. Writing reliable, distributed applications can feel like herding cats, except the cats are servers, and they occasionally crash or lose network connectivity. At Resonate we are trying to bring the simplicity of async/await to durable executions, making it easier to build robust distributed systems.

In this post, we’ll walk through a demo Function-as-a-Service (FaaS) platform built with Resonate. This isn’t a full-fledged FaaS platform (let’s not reinvent the wheel entirely), but rather a showcase of Resonate’s capabilities. We’ll focus on how Resonate simplifies complex distributed systems tasks like retries, routing, and fault tolerance, which are essential to any FaaS platform, all while keeping the developer experience smooth and intuitive.

Why Build an On-Premise FaaS Platform?

Function-as-a-Service (FaaS) is a cloud computing model that lets developers deploy individual pieces of code—called functions—without managing servers or infrastructure. These functions are triggered by specific events, like an HTTP request, a file upload, or a database update. The cloud provider (e.g., AWS Lambda, Azure Functions) automatically handles scaling, resource allocation, and runtime environments. You pay only for the compute time your functions consume, in millisecond increments.

The "serverless" nature of FaaS means developers focus purely on writing code to solve problems, while the provider abstracts away servers, virtual machines, and containers. However, "serverless" doesn’t mean there are no servers—it means you don’t see or manage them.

The idea of building an on-premise Function-as-a-Service (FaaS) platform might seem counterintuitive. Isn’t the whole point of FaaS to be “serverless,” abstracting away the infrastructure so you don’t have to worry about servers? The answer lies in the unique benefits that an on-premise FaaS platform can offer, especially for specific use cases like GPU and AI workloads or sensitive data processing.

Control Over Infrastructure: Cloud-based FaaS platforms are great for many use cases, but you’re constrained by the provider’s runtime environments, resource limits, and scaling policies. On-premise, you decide which hardware (such as GPUs), runtimes, and limits your functions get.

Security and Data Privacy: For organizations handling sensitive data, keeping everything on-premise is table stakes. With an on-premise FaaS platform, your data never leaves your premises, reducing the risk of breaches or compliance issues.

Predictable Costs: Cloud-based FaaS platforms often come with variable costs that can spiral out of control if your workloads scale unexpectedly. No more surprise bills because your functions went viral at 2 a.m.

Why Resonate?

Resonate is designed to make distributed systems as straightforward as writing async/await code. That makes it a good fit for an on-prem FaaS platform, because it abstracts away the complexities of distributed systems, providing:

Distributed Async/Await: Write functions as simple async/await code while Resonate guarantees crash-resistant execution.

Routing: Tasks are directed to the right workers seamlessly, thanks to Resonate’s task framework and distributed event loop. This allows the FaaS platform to direct the workload based on user input or other defined criteria.

Durability: Automatic retries and built-in replayability ensure functions survive hardware failures and network issues.

These features make Resonate an excellent choice for building our demo FaaS platform, especially for on-premise use cases where control, security, and cost predictability are critical.
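To make the durability idea concrete, here is a minimal plain-Python sketch of replay. It assumes a function is written as a generator that yields named steps, and that a dict stands in for durable storage. It is not Resonate’s implementation: run_durably, the step tuples, and the journal are hypothetical names chosen for illustration. After a simulated crash, rerunning the function replays the completed step from the journal instead of executing it again.

```python
from typing import Any, Callable, Generator

# Hypothetical sketch, not Resonate's implementation: a durable function is a
# generator that yields (step_name, thunk) pairs, and `journal` stands in for
# durable storage of completed step results.
Step = tuple[str, Callable[[], Any]]


def run_durably(fn: Callable[[], Generator[Step, Any, Any]], journal: dict[str, Any]) -> Any:
    """Drive `fn`, executing only steps whose results are not yet journaled."""
    gen = fn()
    try:
        name, thunk = gen.send(None)
        while True:
            if name not in journal:
                journal[name] = thunk()  # first time: run the step and record it
            # Replay: hand back the recorded result instead of re-running the step.
            name, thunk = gen.send(journal[name])
    except StopIteration as stop:
        return stop.value


# Demo: a step that "crashes" on its first attempt.
calls: list[str] = []
attempts = {"compute": 0}


def flaky_compute(x: int) -> int:
    attempts["compute"] += 1
    if attempts["compute"] == 1:
        raise ConnectionError("simulated crash")
    return x + 22


def pipeline():
    a = yield ("fetch", lambda: calls.append("fetch") or 20)
    b = yield ("compute", lambda: flaky_compute(a))
    return b


journal: dict[str, Any] = {}
try:
    run_durably(pipeline, journal)  # first run fails during "compute"
except ConnectionError:
    pass
result = run_durably(pipeline, journal)  # rerun replays "fetch" from the journal
```

The second run returns 42 while "fetch" has executed only once, which is the property that lets a function survive a crash midway through.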

The Demo FaaS Platform

Our demo FaaS platform consists of two main components:

A CLI application that submits scripts for execution.

A worker that executes the scripts, with support for GPU workloads.

Let’s dive into the code and see how Resonate makes this possible.

The CLI Application

The CLI application enables users to submit scripts for execution. You can get the full version of the code here.

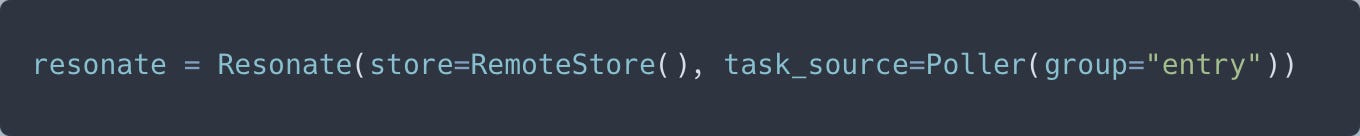

In this code:

resonate is the instance we use to interact with Resonate. For this specific node we set the group to entry and use the default connection values.

execute is the function that handles the actual execution of the script. Note that execute needs no implementation in this file, because it runs remotely. Think of it like a function declaration (a prototype) in C: it lets the Resonate Context understand the function’s arguments and return type, while the implementation is provided by the worker node.
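The declaration-versus-implementation split can be sketched in plain Python. This is a hypothetical illustration, not Resonate’s API: make_request and WORKER_REGISTRY are invented names. The caller uses the body-less execute only to check arguments and to name the function in a message, and the worker resolves that name against its own implementation.

```python
import inspect
from typing import Any


# Hypothetical illustration, not Resonate's API. The caller only holds a
# body-less declaration, much like a C prototype.
def execute(content: str, job_id: str) -> str:
    ...  # implemented on the worker; never executed locally


def make_request(fn, *args: Any) -> dict:
    """Validate args against the declared signature, then build a message
    that names the function instead of shipping its code."""
    inspect.signature(fn).bind(*args)  # raises TypeError on a mismatch
    return {"func": fn.__name__, "args": list(args)}


# Worker side: the real implementation, looked up by the same name.
WORKER_REGISTRY = {
    "execute": lambda content, job_id: f"ran {job_id} ({len(content)} chars)",
}

msg = make_request(execute, "print('hi')", "job-1")
output = WORKER_REGISTRY[msg["func"]](*msg["args"])
```

Calling make_request(execute, "only one arg") fails locally with a TypeError, before anything is sent to a worker.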

prep_execute reads a script from a file and submits it for execution. The register decorator lets us define a custom retry policy; here we do not want any retries, so we use never(). This is also a good moment to talk about Resonate’s structured concurrency. By default, Resonate functions enforce structured concurrency: any concurrent execution started within a Context is implicitly awaited before that Context exits. In this case, we explicitly await the result of running the script on a gpu worker.
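Retry policies like never() can be modeled as objects that produce a schedule of delays. The sketch below assumes that model; Never, Exponential, and call_with_retry are hypothetical stand-ins, not Resonate’s classes, and Resonate’s real defaults may differ. The sleep parameter is injectable so the example runs instantly.

```python
import time
from dataclasses import dataclass
from typing import Callable, TypeVar, Union

T = TypeVar("T")

# Hypothetical stand-ins for retry policies; not Resonate's classes.


@dataclass(frozen=True)
class Never:
    """A single attempt with no retries."""

    def delays(self) -> list[float]:
        return []


@dataclass(frozen=True)
class Exponential:
    """Up to `max_retries` retries, multiplying the delay by `factor` each time."""

    initial: float = 0.1
    factor: float = 2.0
    max_retries: int = 3

    def delays(self) -> list[float]:
        return [self.initial * self.factor**i for i in range(self.max_retries)]


def call_with_retry(
    fn: Callable[[], T],
    policy: Union[Never, Exponential],
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    for delay in policy.delays():
        try:
            return fn()
        except Exception:
            sleep(delay)  # back off, then try again
    return fn()  # final attempt; any exception propagates to the caller


class Flaky:
    """Fails `fail_times` times, then succeeds."""

    def __init__(self, fail_times: int) -> None:
        self.fail_times = fail_times
        self.calls = 0

    def __call__(self) -> str:
        self.calls += 1
        if self.calls <= self.fail_times:
            raise RuntimeError("transient failure")
        return "ok"


slept: list[float] = []
flaky = Flaky(fail_times=2)
result = call_with_retry(flaky, Exponential(), sleep=slept.append)
```

With Never(), the same Flaky(fail_times=1) call raises immediately after one attempt, which matches the intent of never() in prep_execute: a failed script run is reported rather than silently re-run.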

In Resonate, yield plays the role that await plays in other runtimes. Using yield and generators instead of await and async functions gives Resonate full control over the execution and where each step begins and ends, which is important for executing Resonate functions deterministically. Resonate’s options mechanism handles routing and retries, ensuring that tasks are sent to the appropriate workers (in this case, GPU workers) and retried when necessary.
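The reason yield gives the runtime this control is that a generator hands each step back to whoever drives it. A minimal sketch, assuming hypothetical Call and run helpers that are not Resonate’s API: the function yields a description of the call, and the driver decides which group handles it and records every step before sending the result back in, loosely mirroring ctx.rfc(...).options(send_to=...) in the CLI code.

```python
from dataclasses import dataclass, replace
from typing import Any, Callable, Generator

# Hypothetical sketch, not Resonate's API: functions yield Call descriptions
# and a driver decides where each call runs.


@dataclass(frozen=True)
class Call:
    fn: Callable[..., Any]
    args: tuple = ()
    send_to: str = "local"

    def options(self, send_to: str) -> "Call":
        return replace(self, send_to=send_to)


def run(
    gen: Generator[Call, Any, Any],
    groups: dict[str, Callable[[Call], Any]],
    trace: list[str],
) -> Any:
    value = None
    while True:
        try:
            call = gen.send(value)  # resume the function up to its next yield
        except StopIteration as stop:
            return stop.value
        trace.append(f"{call.fn.__name__} -> {call.send_to}")  # the driver sees every step
        value = groups[call.send_to](call)


def execute(content: str) -> str:
    return content.upper()  # stand-in for real script execution


def prep_execute(content: str):
    result = yield Call(execute, (content,)).options(send_to="gpu")
    return f"result: {result}"


groups = {
    "local": lambda call: call.fn(*call.args),
    "gpu": lambda call: call.fn(*call.args),  # pretend this runs on a GPU worker
}
trace: list[str] = []
out = run(prep_execute("hello"), groups, trace)
```

With async/await, the event loop would run the awaited coroutine directly; with yield, the function only describes the call, so the driver can route, record, or retry it before resuming the function.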

The Worker

The worker is responsible for executing the scripts. You can get the whole code for the worker here.

In this code:

The execute function runs the script in a sandboxed environment, ensuring isolation and security. This is the actual implementation of the execute declaration from the previous snippet. Note that we do not provide an explicit retry policy when registering this function, so it uses the default policy.

When the worker creates its Resonate instance, it registers itself as a Poller that listens for tasks assigned to the gpu group. gpu is an arbitrary group name given to this worker node, and it is the name the CLI application references when invoking the execute function remotely:

result = yield ctx.rfc(execute, content, id).options(id=id, send_to=poll("gpu"))
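Group-based routing can be pictured as one queue per group, with each worker polling only its own group’s queue. This is a hypothetical sketch using Python’s queue and threading modules; Router and poller are invented names, and Resonate’s actual routing goes through the Resonate server rather than an in-process queue like this one.

```python
import queue
import threading
from typing import Any, Callable

# Hypothetical sketch: one queue per group; each worker polls only its own.


class Router:
    def __init__(self) -> None:
        self._queues: dict[str, queue.Queue] = {}
        self._lock = threading.Lock()

    def queue_for(self, group: str) -> queue.Queue:
        with self._lock:
            if group not in self._queues:
                self._queues[group] = queue.Queue()
            return self._queues[group]

    def send_to(self, group: str, task: Any) -> queue.Queue:
        """Enqueue `task` for `group` and return a queue that will hold the result."""
        reply: queue.Queue = queue.Queue(maxsize=1)
        self.queue_for(group).put((task, reply))
        return reply


def poller(router: Router, group: str, handler: Callable[[Any], Any], stop: threading.Event) -> None:
    tasks = router.queue_for(group)
    while not stop.is_set():
        try:
            task, reply = tasks.get(timeout=0.05)
        except queue.Empty:
            continue  # nothing for this group yet; check the stop flag again
        reply.put(handler(task))


router = Router()
stop = threading.Event()
worker = threading.Thread(
    target=poller, args=(router, "gpu", lambda t: f"gpu ran {t}", stop), daemon=True
)
worker.start()
result = router.send_to("gpu", "job-1").get(timeout=2)  # handled by the gpu poller
router.send_to("entry", "job-2")  # no worker polls "entry", so it stays queued
stop.set()
worker.join()
```

Because the group name is just a string, adding a second kind of worker (say, a CPU-only pool) means starting another poller on a different group; callers choose the destination by name.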

Output and errors are saved to files for later retrieval, and the function’s return value, which refers to those files rather than containing their contents, resolves the promise associated with this function call. Returning the entire stdout would work for small workloads, but here we showcase a more scalable approach.
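The pattern of running a script in a separate process and returning file references can be sketched with subprocess. This is an illustration under stated assumptions, not the project’s worker code: this execute, the per-job directory layout, and the returned dict are hypothetical. A subprocess with its own working directory and a timeout is only light isolation, not a real sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical worker-side sketch, not the project's actual worker code.


def execute(content: str, job_id: str, out_dir: Path, timeout: float = 30.0) -> dict:
    """Run `content` as a Python script and return references to its output files."""
    job_dir = out_dir / job_id
    job_dir.mkdir(parents=True, exist_ok=True)
    script = job_dir / "script.py"
    script.write_text(content)
    stdout_path = job_dir / "stdout.txt"
    stderr_path = job_dir / "stderr.txt"
    with stdout_path.open("w") as out, stderr_path.open("w") as err:
        proc = subprocess.run(
            # -I: isolated mode (ignores PYTHON* env vars and user site-packages)
            [sys.executable, "-I", str(script.resolve())],
            cwd=job_dir,
            stdout=out,
            stderr=err,
            timeout=timeout,  # raises subprocess.TimeoutExpired if exceeded
        )
    # Return file paths rather than the (possibly large) output itself.
    return {"returncode": proc.returncode, "stdout": str(stdout_path), "stderr": str(stderr_path)}


work_dir = Path(tempfile.mkdtemp())
ok = execute("print('hello from job')\n", "job-1", work_dir)
failed = execute("import sys\nsys.exit(3)\n", "job-2", work_dir)
```

The returned dict stays small no matter how much the script prints, so it is cheap to store as the resolved value of a promise; the caller reads the files only when it needs the output.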

You can review the complete project here. The modulate.py and worker.py files, plus the Resonate server, are all you need for our simple on-prem FaaS platform. See our server quick start for how to install and run the Resonate server locally, or just run these two commands:

> brew install resonatehq/tap/resonate

> resonate serve

Wrapping Up

While this demo isn’t a full-fledged FaaS platform, it highlights Resonate’s strengths in building reliable distributed systems. Resonate abstracts away some of the hard parts of distributed systems, like retries and routing, while still letting you access them and override the defaults when needed.

Whether you’re running GPU workloads like AI training or inference or building custom on-premise solutions, Resonate provides the tools you need to simplify development and build robust distributed systems.

If you’re interested in exploring Resonate further, check out the official documentation at resonatehq.io and give it a try in your next project. And if you build something cool, let us know; we’d love to hear about it!