Resonate Server + Deterministic Simulation Testing

The Resonate Server undergoes unit tests, DST, and Linearizability checks

Why test software?

We test software to determine whether it is reliable enough for the developers and users who depend on it.

This may seem like an obvious answer, but not all software is equal, so not all software demands the same level of rigor in determining its reliability.

On top of that, not all testing strategies are equal.

Consider a dog name generator you find on the internet. I think most folks would agree that it’s a fairly inconsequential piece of technology. If it malfunctions, you probably wouldn’t be that surprised, and the consequences would be minimal. The situation does not call for rigorous testing of the software’s reliability.

Consider a popular ride-hailing app. You could argue that this is a somewhat consequential piece of technology. If it malfunctions, you could miss a flight or be late to work. You and many others rely on it and thus expect it to work. The company running it surely understands the cost of malfunctions and the benefit of offsetting that cost by investing in testing; if the app doesn’t work, you are likely to switch to a competitor. The situation demands that testers validate the software for reliability.

Now consider space shuttle navigation software or a pacemaker. If the software does not behave properly in these technologies, it is a matter of life and death for the people involved. It is hard to put a value on the consequences of malfunction in these instances. The situation demands rigorous testing so that the software remains reliable.

The heavier the consequences of malfunctioning software, the more we rely on it not to malfunction. Therefore, the heavier the consequences, the more rigorous the testing of its reliability must be.

We want developers to trust the Resonate Server in their technology stacks across a wide range of use cases. We want the Resonate Server to reliably serve distributed applications.

In this post, we explore how our testing strategy ensures the Resonate Server remains reliable.

What testing methods are used?

We test the Resonate Server using a variety of methods:

Unit Tests

Deterministic Simulation

Linearizability

Most folks reading this will likely already be very familiar with unit tests. Unit tests play a role in an overall testing strategy, but they often fail to detect issues that emerge in production-like conditions.

Rigorous reliability testing, especially in distributed systems, requires methods that simulate complex conditions.

Resonate currently combines Deterministic Simulation Testing with Linearizability Checking.

What is Deterministic Simulation Testing?

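Without being too formal, Deterministic Simulation Testing (DST) runs the system under a broad set of simulated conditions while the test controls the sources of nondeterminism, such as time, scheduling, and generated IDs, so that every run is reproducible.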

What is Linearizability Checking?

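Again without being too formal, Linearizability Checking verifies that the system remains correct under concurrency: each operation must appear to take effect atomically at some instant between its request and its response, so that the observed history matches some valid one-at-a-time execution.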
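As a rough illustration of the idea, here is a minimal brute-force sketch in Go, assuming a single integer register that starts at 0. It is not how porcupine works (porcupine's search is far more efficient), and the `Op` type and `Linearizable` function are hypothetical. The checker looks for any sequential order that respects real time (an operation that returned before another was called must come first) and that a plain register could have produced.

```go
package main

import "fmt"

// Op is one completed operation in a concurrent history (hypothetical type).
// Call and Return are the logical times at which the client invoked the
// operation and received its response.
type Op struct {
	Kind   string // "write" or "read"
	Value  int    // value written, or value observed by the read
	Call   int
	Return int
}

// Linearizable reports whether some sequential order of ops exists that
// (1) respects real-time order and (2) is valid for a register starting at 0.
// The search is exhaustive, so its worst-case cost grows factorially.
func Linearizable(ops []Op) bool {
	used := make([]bool, len(ops))
	var search func(done int, state int) bool
	search = func(done int, state int) bool {
		if done == len(ops) {
			return true // every op placed in a valid sequential order
		}
		for i, op := range ops {
			if used[i] || !minimal(ops, used, i) {
				continue
			}
			next := state
			switch op.Kind {
			case "write":
				next = op.Value
			case "read":
				if op.Value != state {
					continue // the read saw a value the register did not hold
				}
			}
			used[i] = true
			if search(done+1, next) {
				return true
			}
			used[i] = false // backtrack and try another candidate
		}
		return false
	}
	return search(0, 0)
}

// minimal reports whether op i may be linearized next: no op that is still
// unplaced may have returned before op i was called.
func minimal(ops []Op, used []bool, i int) bool {
	for j, other := range ops {
		if j != i && !used[j] && other.Return < ops[i].Call {
			return false
		}
	}
	return true
}

func main() {
	// Write(1) overlaps the read, so the read may legally observe 1.
	ok := []Op{
		{Kind: "write", Value: 1, Call: 0, Return: 10},
		{Kind: "read", Value: 1, Call: 2, Return: 5},
	}
	// The read starts after Write(1) returned, so observing 0 is a violation.
	bad := []Op{
		{Kind: "write", Value: 1, Call: 0, Return: 3},
		{Kind: "read", Value: 0, Call: 5, Return: 8},
	}
	fmt.Println(Linearizable(ok), Linearizable(bad)) // true false
}
```

The exhaustive search may try up to n! orderings for n operations, which is why the size of the history matters so much in practice.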

What does this look like for the Resonate Server?

In practice, a testing harness first sends many different requests to the server over a period of time in a controlled way (DST). The DST run yields a set of request-response pairs, which are then fed to a Linearizability Checker, in this case porcupine.

How are the conditions simulated in a controlled way?

Several design aspects of the server enable controlled and reproducible simulations.

The server’s single-threaded kernel acts as a scheduler, controlling execution on each tick of the system and processing steps sequentially.

The server uses coroutines, allowing functions to yield control to the scheduler as needed. This enables the scheduler to execute explicit steps on each tick.

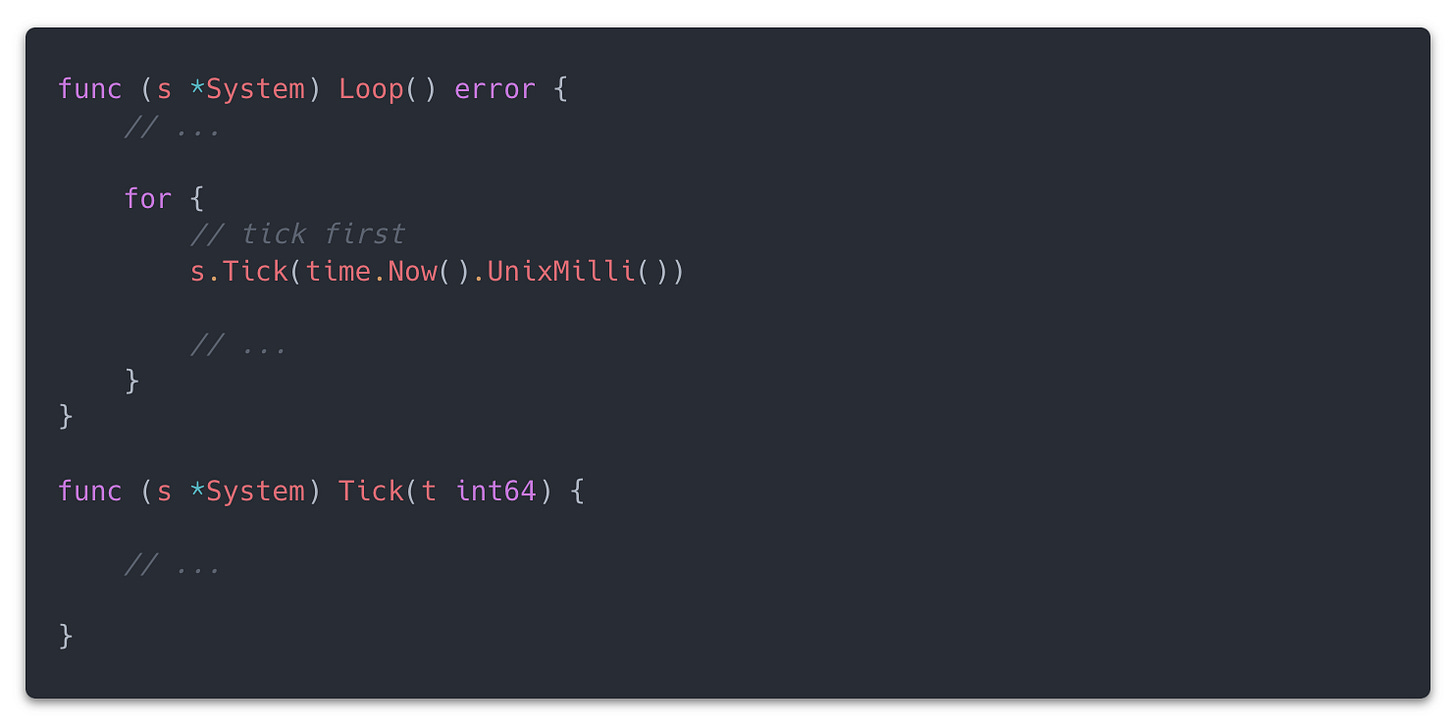
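The sketch below illustrates the tick-and-yield pattern. Go has no built-in coroutines, so here each coroutine is modeled as a resumable step function that hands control back to the scheduler after every step. `Coroutine`, `Scheduler`, and `counter` are hypothetical names for illustration, not the server's actual types.

```go
package main

import "fmt"

// Coroutine is a hypothetical resumable unit of work. Each Resume runs one
// step; returning false "yields" control back to the scheduler, and
// returning true signals that the coroutine has finished.
type Coroutine interface {
	Resume(now int64) (done bool)
}

// Scheduler is a single-threaded kernel sketch: each Tick advances every
// runnable coroutine by exactly one step, in a fixed order.
type Scheduler struct {
	running []Coroutine
}

func (s *Scheduler) Add(c Coroutine) { s.running = append(s.running, c) }

// Tick performs one step of every coroutine at logical time now and drops
// the ones that completed. Nothing executes between ticks.
func (s *Scheduler) Tick(now int64) {
	remaining := s.running[:0] // in-place filter of still-running coroutines
	for _, c := range s.running {
		if !c.Resume(now) {
			remaining = append(remaining, c)
		}
	}
	s.running = remaining
}

// Done reports whether every coroutine has finished.
func (s *Scheduler) Done() bool { return len(s.running) == 0 }

// counter is a toy coroutine that needs `left` steps and records each step.
type counter struct {
	name  string
	left  int
	trace *[]string
}

func (c *counter) Resume(now int64) bool {
	*c.trace = append(*c.trace, fmt.Sprintf("%s@%d", c.name, now))
	c.left--
	return c.left == 0
}

func main() {
	var trace []string
	s := &Scheduler{}
	s.Add(&counter{name: "a", left: 2, trace: &trace})
	s.Add(&counter{name: "b", left: 1, trace: &trace})
	for t := int64(0); !s.Done(); t++ {
		s.Tick(t)
	}
	fmt.Println(trace) // [a@0 b@0 a@1]
}
```

Because one thread advances every coroutine in a fixed order, the interleaving depends only on the sequence of ticks, not on the operating system's thread scheduler.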

The kernel provides a Tick() function as a hook, allowing the testing harness to control the scheduler’s progress directly.

When the server runs normally, the scheduler ticks forward every millisecond.

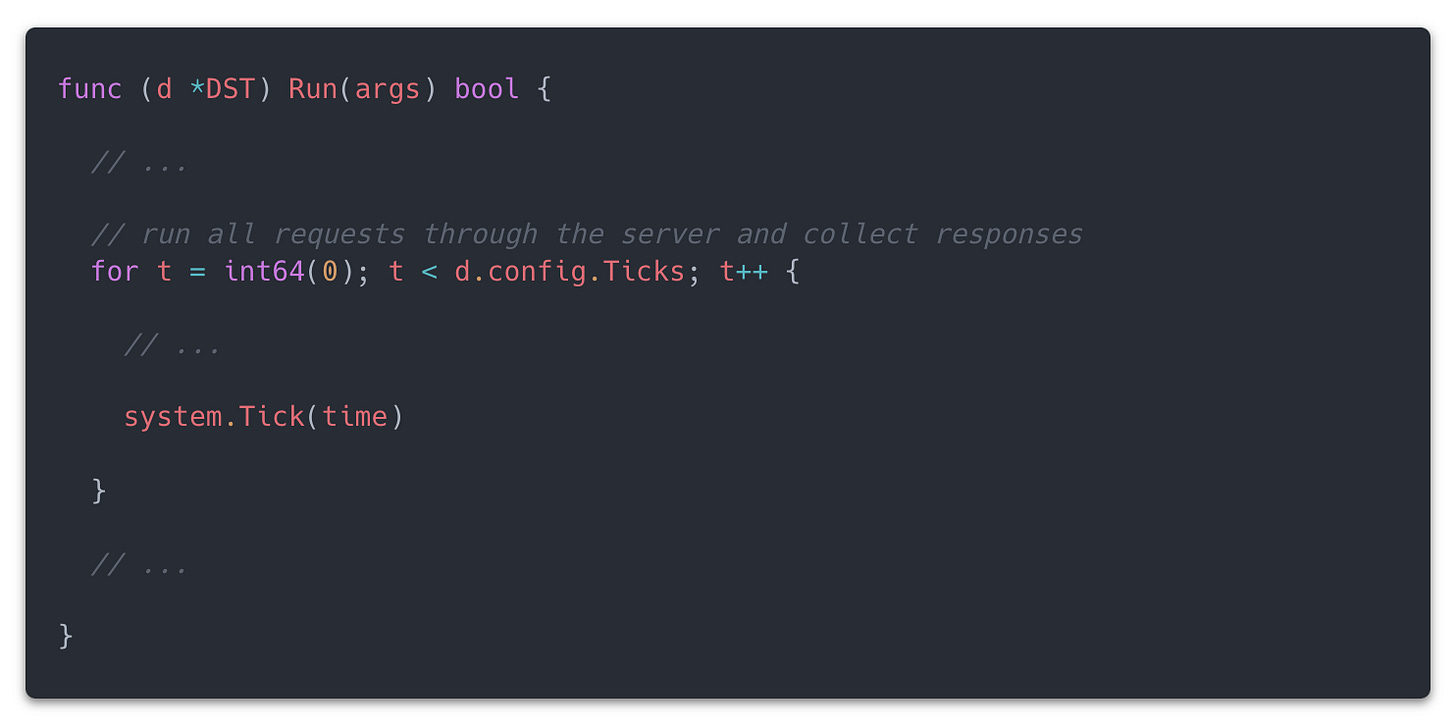

When the testing harness runs the simulation, it directly controls the scheduler’s ticks and timestamps.

These design aspects of the Resonate Server allow the testing harness to control time (through timestamps), system progress (through ticks), and outgoing requests to the server.

The testing harness stops ticking the system once the server has processed every request and the harness has received all of the responses.
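Here is a minimal sketch of the two ways of driving a kernel, assuming a toy `Kernel` that answers one queued request per `Tick(now)`. `RunRealtime` mirrors the normal mode (a ticker firing every millisecond, timestamps from the wall clock), while `Simulate` plays the role of a test harness that owns the clock and stops once every request has a response. All names here are hypothetical.

```go
package main

import (
	"fmt"
	"time"
)

// Kernel is a hypothetical stand-in for the server kernel: it queues
// requests and answers at most one per tick, using the time it is given.
type Kernel struct {
	queue     []string
	responses []string
}

func (k *Kernel) Submit(req string) { k.queue = append(k.queue, req) }

// Tick advances the kernel by one step at the caller-supplied time.
func (k *Kernel) Tick(now int64) {
	if len(k.queue) == 0 {
		return
	}
	req := k.queue[0]
	k.queue = k.queue[1:]
	k.responses = append(k.responses, fmt.Sprintf("%s ok at t=%d", req, now))
}

// RunRealtime sketches normal operation: a ticker fires every millisecond
// and the wall clock supplies the timestamp. Shown for contrast only.
func RunRealtime(k *Kernel, stop <-chan struct{}) {
	ticker := time.NewTicker(time.Millisecond)
	defer ticker.Stop()
	for {
		select {
		case <-stop:
			return
		case t := <-ticker.C:
			k.Tick(t.UnixMilli())
		}
	}
}

// Simulate sketches the harness: it submits the requests, then ticks with
// synthetic timestamps until every request has received a response.
func Simulate(requests []string, step int64) []string {
	k := &Kernel{}
	for _, r := range requests {
		k.Submit(r)
	}
	var now int64
	for len(k.responses) < len(requests) {
		k.Tick(now)
		now += step // time only moves when the harness says so
	}
	return k.responses
}

func main() {
	for _, r := range Simulate([]string{"create p1", "resolve p1"}, 10) {
		fmt.Println(r)
	}
	// create p1 ok at t=0
	// resolve p1 ok at t=10
}
```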

To ensure reproducibility, each simulation accepts a seed: an integer from which all other consequential entity IDs in the system are deterministically generated. As long as the algorithm for generating IDs is deterministic, the DST run and the Linearizability Check also produce deterministic results, so a run can be reproduced later by reusing the same seed.

How do simulation tests find issues?

The Resonate Server extensively uses assert statements throughout the codebase to detect invalid conditions.

Using assert statements this way forces developers to anticipate and prevent undesirable conditions, leading to fewer errors reaching a DST run.

When running DST, we detect issues when an assertion error causes a test failure. This works just like a unit test, except that the unit is the entire system operating under complex conditions, with fully reproducible results.
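To show how an assertion turns into a failed simulation run, here is a toy sketch. The `assert` helper, the `Promise` type, and the invariant that `Resolve` is only ever called on pending promises are all hypothetical; in this toy model the caller is expected to check the state first, so a violation points to a bug elsewhere. `runSimulation` recovers the panic and reports it the way a harness might.

```go
package main

import "fmt"

// assert is a hypothetical helper: it panics, failing the simulation run,
// whenever an invariant does not hold.
func assert(cond bool, msg string) {
	if !cond {
		panic("assertion failed: " + msg)
	}
}

// Promise is a toy promise record.
type Promise struct {
	ID    string
	State string // "pending", "resolved", or "rejected"
}

// Resolve assumes its caller already checked that p is pending, so an
// assertion guards that (toy) invariant instead of silently tolerating it.
func Resolve(p *Promise) {
	assert(p.State == "pending", fmt.Sprintf("promise %s is %s, want pending", p.ID, p.State))
	p.State = "resolved"
}

// runSimulation converts a panic from any assertion into a failed run.
func runSimulation(steps func()) (err error) {
	defer func() {
		if r := recover(); r != nil {
			err = fmt.Errorf("%v", r)
		}
	}()
	steps()
	return nil
}

func main() {
	p := &Promise{ID: "p1", State: "pending"}
	err := runSimulation(func() {
		Resolve(p)
		Resolve(p) // the second transition violates the invariant
	})
	fmt.Println(err) // assertion failed: promise p1 is resolved, want pending
}
```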

If a DST run encounters an assertion error, it does not proceed to the Linearizability Check. So, DST runs need to be consistently passing before Linearizability Checks become relevant.

Once DST runs pass consistently, verifying linearizability becomes the next milestone.

During the DST run, the testing harness sends requests to the server and its internal components and pairs each request with its response. At the end of the run, the harness submits the entire set of pairs to the Linearizability Checker. However, the number of possible orderings the checker must consider grows exponentially with the size of the data set, so the data is typically partitioned in a meaningful way to give the check a realistic chance of finishing.

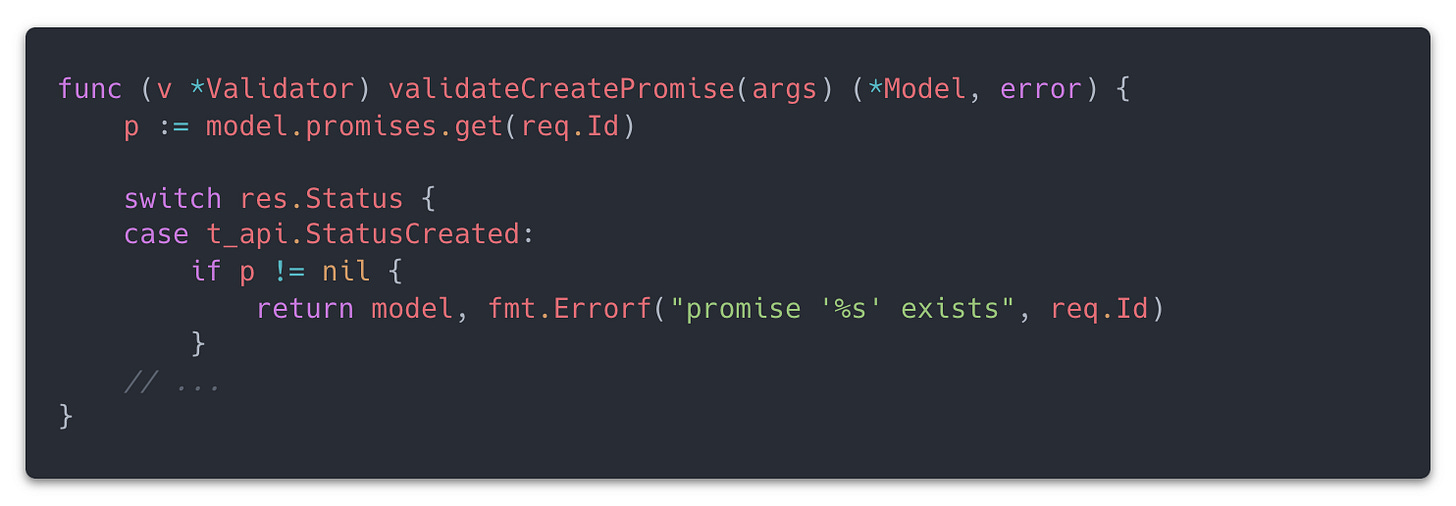
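Partitioning is sound when the parts are independent: linearizability is a local property, so a history is linearizable if and only if each per-object subhistory is. The sketch below assumes, hypothetically, that pairs touching different promises are independent and groups them by promise ID; `Pair` and `PartitionByPromise` are illustrative names. porcupine's `Model` accepts a `Partition` function for this kind of split.

```go
package main

import (
	"fmt"
	"sort"
)

// Pair is a hypothetical request/response record collected by the harness.
type Pair struct {
	PromiseID string
	Request   string
	Response  string
	Call      int64 // tick at which the request was sent
	Return    int64 // tick at which the response arrived
}

// PartitionByPromise groups pairs by the promise they touch, preserving the
// original order within each group, so each group can be checked on its own.
func PartitionByPromise(pairs []Pair) [][]Pair {
	groups := map[string][]Pair{}
	for _, p := range pairs {
		groups[p.PromiseID] = append(groups[p.PromiseID], p)
	}
	keys := make([]string, 0, len(groups))
	for k := range groups {
		keys = append(keys, k)
	}
	sort.Strings(keys) // map iteration order is random; sort for determinism
	out := make([][]Pair, 0, len(keys))
	for _, k := range keys {
		out = append(out, groups[k])
	}
	return out
}

func main() {
	history := []Pair{
		{"p1", "create", "created", 0, 2},
		{"p2", "create", "created", 1, 3},
		{"p1", "resolve", "resolved", 4, 6},
		{"p2", "create", "already exists", 5, 7},
	}
	for _, part := range PartitionByPromise(history) {
		fmt.Println(part[0].PromiseID, len(part))
	}
	// p1 2
	// p2 2
}
```

With k independent partitions of m operations each, a naive search faces k·m! orderings in the worst case instead of (k·m)!.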

In practice, this means that each response is validated against a model of its partition, and the model is constructed dynamically as responses are processed.

For a somewhat hand-wavy example, say the very first response to be validated is for a request to create a promise on the server. The system enforces a unique ID for each promise: when processing a creation request, the server first checks the promise store to determine whether the ID already exists. The validator mirrors this requirement by checking the model for an existing response for the same promise ID.

If one exists, the response is flagged, because there shouldn’t be one. If none exists, the check passes. In either case, the response is then added to the model, and the next response is checked against its partition’s model by its respective validator.
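The promise-creation example can be sketched as a small validator. `Response`, `Model`, and `ValidateCreate` are hypothetical, and the second rule (an "already exists" response for an ID the model has never seen is also suspect) is an extra assumption beyond the example above.

```go
package main

import "fmt"

// Response is a hypothetical record of a create-promise response.
type Response struct {
	PromiseID string
	Created   bool // true if the server reported that it created the promise
}

// Model tracks which promises the validator believes exist, built up one
// response at a time.
type Model struct {
	exists map[string]bool
}

func NewModel() *Model { return &Model{exists: map[string]bool{}} }

// ValidateCreate checks one create response against the model and then
// records it in the model, whether or not the check passed.
func (m *Model) ValidateCreate(r Response) error {
	var err error
	switch {
	case r.Created && m.exists[r.PromiseID]:
		err = fmt.Errorf("promise %s created twice", r.PromiseID)
	case !r.Created && !m.exists[r.PromiseID]:
		err = fmt.Errorf("promise %s reported as existing but never created", r.PromiseID)
	}
	m.exists[r.PromiseID] = true // the response is added to the model either way
	return err
}

func main() {
	m := NewModel()
	for _, r := range []Response{
		{PromiseID: "p1", Created: true},
		{PromiseID: "p1", Created: false}, // duplicate create correctly refused
		{PromiseID: "p1", Created: true},  // server claims to create p1 again
	} {
		fmt.Println(m.ValidateCreate(r))
	}
	// <nil>
	// <nil>
	// promise p1 created twice
}
```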

Validators play a critical role in detecting system issues. Only an engineer with deep knowledge of the system’s intended behavior can craft them effectively.

If the validators function correctly and the Linearizability Checker detects an anomaly, it could indicate a bug or an unaccounted-for system behavior.

At this stage, as DST runs consistently pass without assertion errors and Linearizability Checks validate system correctness, we gain confidence that the software behaves as expected, even under rare operating conditions.

Does this replace traditional stress testing?

Developers commonly test software by deploying it and subjecting it to various scenarios, a process known as stress testing.

Even when using DST and Linearizability, teams can still employ traditional stress testing as a complementary method. However, its focus shifts from finding bugs (testing for consistency) to understanding throughput (testing for performance).

Several key differences between stress testing and DST + Linearizability make stress testing less effective for ensuring consistency.

Cost: Simulations run in a single process, but stress testing requires a production-grade environment with real network requests, making it significantly more expensive.

Coverage: Stress testing limits you to the scenarios you can imagine, making it difficult to anticipate real-world failures. Additionally, manually crafted scenarios run in real time, while simulations, which control time directly, can complete hundreds of runs in the time required for just a few manual tests.

Reproducibility: Stress testing does not guarantee reproducible results, whereas a simulation run can be replayed exactly from its seed.

If you use DST + Linearizability but still want to evaluate your software’s performance under varying loads, stress testing remains useful. However, it becomes much less necessary for verifying correctness.

Conclusion

For the Resonate Server, ensuring reliability means going beyond traditional unit tests. While unit tests catch small, isolated issues, they fall short when it comes to simulating real-world production conditions. To achieve a high level of confidence in consistency, we employ Deterministic Simulation Testing (DST) and Linearizability Checking. DST enables controlled, reproducible testing of complex scenarios, while Linearizability Checking ensures correctness under concurrency. Together, these methods allow us to rigorously test the system under a wide range of conditions and identify issues in a fully reproducible way.

Traditional stress testing still plays a role, but its focus shifts from correctness to performance evaluation. DST and Linearizability allow us to efficiently validate how the system behaves under rare, production-like conditions, ensuring correctness at a fraction of the cost and effort. Once these tests consistently pass, we gain strong confidence in the Resonate Server’s reliability as a foundational component in distributed applications.

By prioritizing rigorous, deterministic, and correctness-focused testing, we ensure that the Resonate Server meets the reliability standards that developers and end users expect.